October 03, 2023

Blog Publishing Platform

Overview:

The blog publishing platform is a web app where users can sign up and publish blogs. The frontend was built with TypeScript, Next.js and Tailwind CSS. The serverless backend was written in TypeScript using Amazon API Gateway, AWS Lambda (Node.js) and Amazon DynamoDB. The AWS infrastructure was deployed with the SST framework, and the CI/CD pipeline runs on GitHub Actions.

The goal of the project was to build a solution where every moving part is split into components, ensuring type safety, reusability and scalability.

GitHub Repository - https://github.com/cray-mx/blogcloud

Frontend:

I decided to use Next.js because the most important page in the app, the home page, had to be server-rendered for performance and SEO. The home page displays all the blogs published by the logged-in user and everyone else. With plain client-side React, all the JavaScript would be sent to the client and the content would be rendered in the browser, using a hook like useEffect to fetch the blogs. The result is a blank page until that fetch call resolves.

It is therefore wise to render the content on the server and send the rendered HTML to the browser, along with any JavaScript needed for interactivity. This gives faster page loads and an overall better user experience. Since the page is rendered on the server, search engine crawlers can read its content directly, which improves SEO. A client-rendered React app, on the other hand, sends an almost empty HTML shell, which hurts SEO.

This is how the home page was structured:

```tsx
import { headers } from 'next/headers'
import { redirect } from 'next/navigation'
import Link from 'next/link'
// NavBar, BlogCard, Create, getUserFromCookie and getAllBlogs are project-local modules

const Home = async (): Promise<JSX.Element> => {
  const cookie = headers().get('cookie')

  // redirect() throws, so below this point cookie is known to be a string
  if (!cookie) {
    redirect('/login')
  }

  const user = getUserFromCookie(cookie)
  const blogs = await getAllBlogs()
  const userBlogs = blogs ? blogs.filter(blog => blog.pk.replace('USER#', '') === user.email) : []

  return (
    <div className='relative h-screen bg-gray-300'>
      <NavBar user={user}/>
      {
        userBlogs.length === 0
          ? (
            <div className='w-full bg-slate-100 pt-2 pb-2 pl-8 pr-8'>
              <span className='text-lg'>You have no posts yet!</span>
              <Link href='/blog' className='text-lg ml-2 text-blue-500'>Create one</Link>
            </div>
          )
          : (
            <div className='flex flex-col justify-center items-center absolute w-1/6 h-1/6 bg-gray-300 shadow-md'>
              <Link href='/blog' className='flex justify-center items-center'>
                <span>{Create}</span>
                <span className='text-2xl text-blue-900'>Create a post</span>
              </Link>
            </div>
          )
      }
      {
        blogs && blogs.length > 0 && (
          <div className='flex flex-col items-center w-full h-[93%] pt-14 pb-14 pl-16 pr-16'>
            {blogs.map((blog) => <BlogCard key={blog.sk.replace('BLOG#', '')} blog={blog}/>)}
          </div>
        )
      }
    </div>
  )
}

export default Home
```

You can ignore userBlogs, which is just the list of blogs belonging to the user. I use it to show different prompts. I check that the user is logged in using the cookie, fetch all the blogs and map each one to a component called BlogCard.
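The page reads the raw Cookie header, which packs every cookie into one string such as `a=1; b=2`. The post does not show getUserFromCookie. A first step for a helper like it would be to split that header into name/value pairs. The sketch below is hypothetical (parseCookies is an illustrative name, not from the repo) and ignores edge cases such as quoted values:

```typescript
// Hypothetical helper: splits a raw Cookie header ("a=1; b=2") into a name -> value map.
const parseCookies = (header: string): Map<string, string> => {
  const cookies = new Map<string, string>()
  for (const part of header.split(';')) {
    const index = part.indexOf('=')
    if (index === -1) continue // skip segments without a name=value pair
    const name = part.slice(0, index).trim()
    const rawValue = part.slice(index + 1).trim() // values may themselves contain '='
    if (name === '' || cookies.has(name)) continue // keep the first occurrence of a name
    let value = rawValue
    try {
      value = decodeURIComponent(rawValue)
    } catch {
      // leave values that are not valid percent-encoding untouched
    }
    cookies.set(name, value)
  }
  return cookies
}
```

With a helper like this, `parseCookies(cookie).get(AUTH_COOKIE)` would yield the JWT string that the rest of getUserFromCookie could decode.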

The BlogCard component is responsible for rendering the blog. All the styles and interactivity related to a blog item go into this component. You can start to see how this would help us scale our codebase.

```tsx
import type { FC } from 'react'
import Image from 'next/image'
import Link from 'next/link'
import dayjs from 'dayjs'
import localizedFormat from 'dayjs/plugin/localizedFormat'
// Blog and getProfileImageUrl are project-local

// Register the plugin once at module load instead of on every render
dayjs.extend(localizedFormat)

const BlogCard: FC<{ blog: Blog }> = ({ blog }) => {
  const { title, content, createdAt, sk, pk } = blog
  const formattedDate = dayjs(createdAt).format('LL')
  const userId = pk.replace('USER#', '')
  const profileImage = getProfileImageUrl(userId)
  const blogId = sk.replace('BLOG#', '')
  const path = `/blog/${blogId}`

  return (
    <Link href={path} className='relative h-36 w-1/2 mb-14 p-4 bg-white hover:bg-gray-100 hover:cursor-pointer'>
      <h2 className='text-2xl text-blue-900'>{title}</h2>
      <span className='absolute right-3 top-2 text-blue-500'>{formattedDate}</span>
      <Image
        src={profileImage}
        width={32}
        height={32}
        alt="Profile"
        className="absolute right-1 bottom-1 mr-2 rounded-[100%]"
      />
      <div className='mt-2'>
        <span>
          {/* First 200 characters, followed by an ellipsis if the post is longer */}
          {content.substring(0, 200)}
          {content.length > 200 && ' ...'}
        </span>
        <span className='text-green-700'>
          {content.length > 200 && ' (click to read more)'}
        </span>
      </div>
    </Link>
  )
}

export default BlogCard
```

This displays a simple view of the blog as a card with the title, the creation date, the creator's profile image and the first 200 characters of the post. Clicking on the card takes you to a full-page view of that blog. I'll cover how the userId and the blogId are stored in the DynamoDB section.
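The preview logic can also be pulled out of the JSX into a small pure function, which makes it easy to unit-test. Here is a hypothetical sketch of that refactor; excerpt and PREVIEW_LENGTH are illustrative names, not code from the repo:

```typescript
// Hypothetical helper (not from the repo): builds the text shown on a BlogCard.
const PREVIEW_LENGTH = 200

interface Excerpt {
  text: string       // the preview, with " ..." appended when the content was cut
  truncated: boolean // true when the content is longer than the preview length
}

const excerpt = (content: string, max: number = PREVIEW_LENGTH): Excerpt => {
  const truncated = content.length > max
  return {
    text: truncated ? `${content.substring(0, max)} ...` : content,
    truncated
  }
}
```

Inside BlogCard, `const preview = excerpt(content)` would then let the JSX render `{preview.text}` and `{preview.truncated && ' (click to read more)'}` in place of the inline length checks.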

You can visit the repo to see how the code is structured. Every route in the app exports a component from its page.tsx file. Types, common components, core packages and image assets are modularised. I have also created my own package for making fetch calls to keep my components clean. Check it out at frontend/src/core/customFetch.ts.

You can create your own packages depending on your needs to keep the codebase well structured.

```ts
// Same as the built-in Response, but json() resolves to the standard response body
type CustomResponse = Omit<Response, 'json'> & {
  json: () => Promise<{
    status: 'success' | 'error'
    message: string
    data?: unknown
  }>
}
```

I use JSON in a fixed format to standardize the shape of responses across the frontend and backend. This helps prevent countless bugs caused by mismatched response shapes. This is one of the reasons why GraphQL was invented!
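The shape is fixed, so the client can check it at runtime before trusting a response. Below is a minimal, dependency-free sketch of such a check. isApiResponse and unwrap are hypothetical names and are not part of the repo's customFetch:

```typescript
// Hypothetical names; the shape mirrors the CustomResponse body above.
interface ApiResponse<T = unknown> {
  status: 'success' | 'error'
  message: string
  data?: T
}

// Runtime check that a parsed JSON body has the standard shape
const isApiResponse = (value: unknown): value is ApiResponse => {
  if (typeof value !== 'object' || value === null) return false
  const candidate = value as Record<string, unknown>
  return (candidate.status === 'success' || candidate.status === 'error') &&
    typeof candidate.message === 'string'
}

// Returns `data` from a success response; throws on error responses or unexpected shapes
const unwrap = <T>(body: unknown): T | undefined => {
  if (!isApiResponse(body)) {
    throw new Error('Response does not match the standard shape')
  }
  if (body.status === 'error') {
    throw new Error(body.message)
  }
  return body.data as T | undefined
}
```

A call site would then read `const blogs = unwrap<Blog[]>(await response.json())`, so every component handles errors the same way.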

Next.js also comes with a handy server middleware which is perfect for authorising routes. The JWT token is stored in a cookie on the browser and the JWT secret is stored as an environment variable on the server.
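The middleware calls an isAuthorized helper that the post does not show. Checking a JWT comes down to recomputing its signature and checking its expiry. The rough sketch below assumes HS256-signed tokens that carry an `exp` claim. signHs256 and verifyHs256 are hypothetical names. The sketch uses node:crypto for illustration, but Next.js middleware runs on the Edge runtime, so a real version there would need the Web Crypto API or a library such as jose:

```typescript
import { createHmac, timingSafeEqual } from 'node:crypto'

type JwtPayload = Record<string, unknown>

// Base64url-encode a JSON value (JWT header and payload segments)
const encodeSegment = (value: unknown): string =>
  Buffer.from(JSON.stringify(value), 'utf8').toString('base64url')

// Decode a base64url JSON segment; returns null if it is not a JSON object
const decodeSegment = (segment: string): JwtPayload | null => {
  try {
    const value: unknown = JSON.parse(Buffer.from(segment, 'base64url').toString('utf8'))
    return typeof value === 'object' && value !== null ? (value as JwtPayload) : null
  } catch {
    return null
  }
}

// HMAC-SHA256 over "header.payload", as HS256 requires
const hmac = (data: string, secret: string): Buffer =>
  createHmac('sha256', secret).update(data).digest()

// Helper for the usage example: issues an HS256 token
const signHs256 = (payload: JwtPayload, secret: string): string => {
  const header = encodeSegment({ alg: 'HS256', typ: 'JWT' })
  const body = encodeSegment(payload)
  const signature = hmac(`${header}.${body}`, secret).toString('base64url')
  return `${header}.${body}.${signature}`
}

// Returns the payload if the signature is valid and the token has not expired, else null
const verifyHs256 = (
  token: string,
  secret: string,
  nowSeconds: number = Math.floor(Date.now() / 1000)
): JwtPayload | null => {
  const parts = token.split('.')
  if (parts.length !== 3) return null
  const [header, body, signature] = parts

  // Refuse any other algorithm, including "none"
  if (decodeSegment(header)?.alg !== 'HS256') return null

  // Compare signatures in constant time; timingSafeEqual needs equal lengths
  const expected = hmac(`${header}.${body}`, secret)
  const actual = Buffer.from(signature, 'base64url')
  if (actual.length !== expected.length || !timingSafeEqual(actual, expected)) return null

  const payload = decodeSegment(body)
  if (payload === null) return null
  // exp is in seconds since the Unix epoch
  if (typeof payload.exp === 'number' && payload.exp <= nowSeconds) return null
  return payload
}
```

With a check like that behind isAuthorized, the middleware only has to decide where to send the user: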

```ts
import { NextResponse, type NextRequest } from 'next/server'
// AUTH_COOKIE and isAuthorized are project-local

export const middleware = async (request: NextRequest): Promise<NextResponse | undefined> => {
  const { pathname } = new URL(request.url)
  const cookie = request.cookies.get(AUTH_COOKIE)
  const authorized = await isAuthorized(cookie)

  switch (pathname) {
    // Protected pages: unauthenticated users are sent to the login page
    case '/home':
    case '/profile':
    case '/blog':
      if (!authorized) return NextResponse.redirect(new URL('/login', request.url))
      break
    // Auth pages: logged-in users go straight to the home page
    case '/signup':
    case '/login':
      if (authorized) return NextResponse.redirect(new URL('/home', request.url))
      break
  }
}

// Run the middleware only on these routes
export const config = {
  matcher: ['/home', '/login', '/signup', '/profile', '/blog']
}
```

Backend:

I went with a fully serverless backend on AWS because it's quick to set up and iterate on. I chose Amazon API Gateway to create the APIs and AWS Lambda functions as the request handlers/controllers. I wanted a fully managed NoSQL database to store the blogs and went with Amazon DynamoDB, because it scales very well and is designed to deliver single-digit millisecond latency at any scale.

I used the SST framework to provision all of the infrastructure. The framework is built on top of the AWS CDK and provides higher-level constructs that make serverless infrastructure easy to define and maintain. It does this by synthesizing the constructs we write into AWS CloudFormation templates, which are then deployed as CloudFormation stacks.

For example, you would define a DynamoDB table:

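```ts
import { RemovalPolicy } from 'aws-cdk-lib'
import { Table } from 'sst/constructs' // assuming SST v2
// `stack` comes from the enclosing SST stack function; appSecrets is project-local

const mainTable = new Table(stack, 'main-table', {
  fields: {
    pk: 'string',
    sk: 'string'
  },
  primaryIndex: {
    partitionKey: 'pk',
    sortKey: 'sk'
  },
  cdk: {
    table: {
      tableName: appSecrets.mainTable,
      // Keep the table and its data in production; delete it when a non-production stage is removed
      removalPolicy: appSecrets.stage !== 'production' ? RemovalPolicy.DESTROY : RemovalPolicy.RETAIN
    }
  }
})
```

DynamoDB is a key-value NoSQL database, in the way that MongoDB is a document database. The items in a table are spread across shards, also called partitions, and DynamoDB hashes an item's partition key to decide which partition stores it. The primary key is either a partition key alone or a composite of a partition key and a sort key. In the example, I'm using a partition key (pk) and a sort key (sk).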

This allows fast, direct lookups when the full primary key is known. When only the partition key is known, we can query by it and get back every item that shares that partition key, optionally narrowing the results with a condition on the sort key. When the partition key itself is not known, we run into the dreaded scan operation, which reads every item in the table. A scan is not only slow but can also be very expensive. AWS bills reads by the size of the data read, and a scan is charged for every item it evaluates, even items that a filter later discards.
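To make the cost difference concrete: DynamoDB measures reads in read capacity units. One unit covers one strongly consistent read, or two eventually consistent reads, of up to 4 KB. For Query and Scan, the sizes of all items read in a request are summed and then rounded up to the next 4 KB. The helper below is a rough, hypothetical estimator for a single request page, based on those rules. Scan and Query pages stop at 1 MB, and each page is rounded separately:

```typescript
// Rough, hypothetical estimator of the read units one Query or Scan page consumes.
// 1 unit = one strongly consistent read of up to 4 KB; eventually consistent reads cost half.
const READ_UNIT_BYTES = 4 * 1024

const estimateReadUnits = (itemSizesInBytes: number[], stronglyConsistent = false): number => {
  // Every item the request reads counts, including items a FilterExpression later drops
  const totalBytes = itemSizesInBytes.reduce((sum, size) => sum + size, 0)
  const units = Math.ceil(totalBytes / READ_UNIT_BYTES)
  return stronglyConsistent ? units : units / 2
}
```

By this estimate, scanning 10,000 items of 1 KB each to find a single blog costs roughly 1,250 units with eventually consistent reads. Applying the same formula to a single 1 KB item fetched by primary key gives 0.5.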

Hence it is crucial to design a database schema that lets us query by primary key most of the time. In a blog app, we have users and we have blogs. Users have IDs and so do blogs. I have used a single-table design, in which all items are stored in one table.

I have used the email address as the user ID, so users are stored with their partition key (pk) set to their email address. They don't really need a sort key (sk). However, we are going with a single-table design and blog items require both pk and sk, so we set sk to the email address as well. Blogs, on the other hand, use the creator's email address as their partition key and a unique ID as their sort key. This keeps all of a user's blogs under one partition key, so they can be fetched with a single query.

```ts
const user = {
  pk: 'USER#rayaan@gmail.com',
  sk: 'USER#rayaan@gmail.com'
}

const blog = {
  pk: 'USER#rayaan@gmail.com',
  sk: 'BLOG#595aeaa9-7ea5-46b7-869b-bd5376b05b35'
}
```

Notice how I have used the prefixes 'USER#' and 'BLOG#'. They make it easy to tell what kind of item we are dealing with. Blog IDs are random UUIDs.
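Key strings like these are easy to get subtly wrong when they are built by hand in many places. The sketch below centralizes them, following the key scheme used by the handler code in this post: getBlog builds pk as `USER#<email>` and sk as `BLOG#<id>`. All helper names are hypothetical:

```typescript
// Hypothetical helpers for the key scheme used by the handler code in this post:
// users: pk = sk = USER#<email>
// blogs: pk = USER#<email>, sk = BLOG#<uuid>
const USER_PREFIX = 'USER#'
const BLOG_PREFIX = 'BLOG#'

interface TableKey {
  pk: string
  sk: string
}

const userKey = (email: string): TableKey => ({
  pk: `${USER_PREFIX}${email}`,
  sk: `${USER_PREFIX}${email}`
})

const blogKey = (email: string, blogId: string): TableKey => ({
  pk: `${USER_PREFIX}${email}`,
  sk: `${BLOG_PREFIX}${blogId}`
})

// Unlike String.replace, this fails loudly when the prefix is missing
const stripPrefix = (value: string, prefix: string): string => {
  if (!value.startsWith(prefix)) {
    throw new Error(`Expected "${value}" to start with "${prefix}"`)
  }
  return value.slice(prefix.length)
}

// Query input for every blog in one user's partition. The begins_with condition
// on the sort key skips the user item itself, whose sk starts with USER#.
const userBlogsQueryInput = (table: string, email: string) => ({
  TableName: table,
  KeyConditionExpression: 'pk = :pk AND begins_with(sk, :prefix)',
  ExpressionAttributeValues: {
    ':pk': { S: `${USER_PREFIX}${email}` },
    ':prefix': { S: BLOG_PREFIX }
  }
})
```

The object returned by userBlogsQueryInput has the shape that `new QueryCommand(...)` from @aws-sdk/client-dynamodb accepts.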

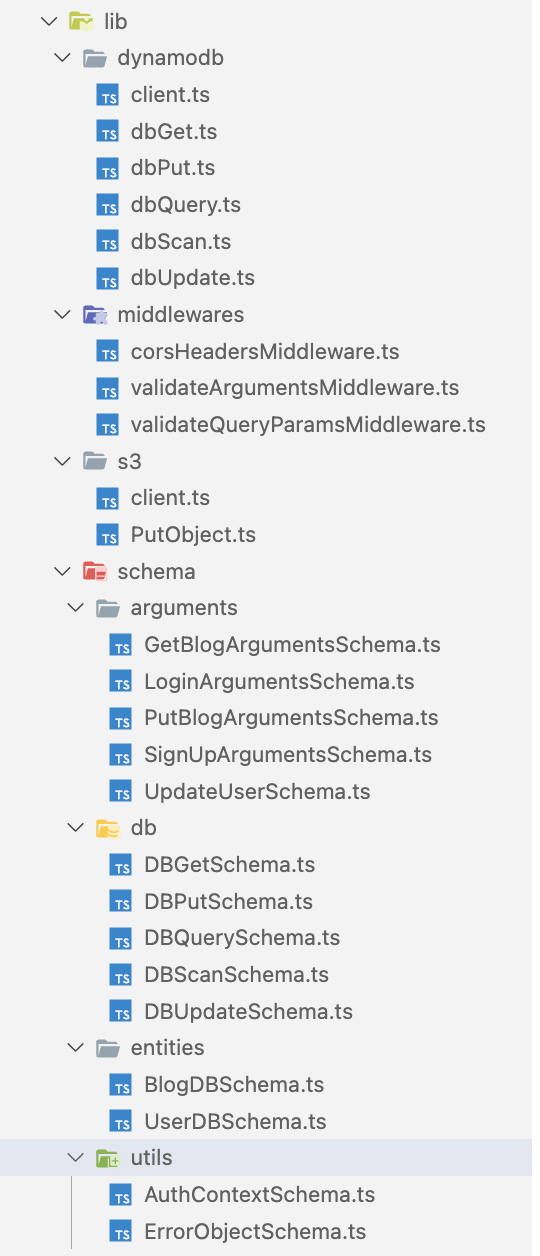

In terms of code structure, I have a lib folder that contains schemas, utilities and packages for interacting with DynamoDB and S3.

For example, a package to retrieve an item from the table given the primary key would look like:

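```ts
import { GetItemCommand } from '@aws-sdk/client-dynamodb'
import { marshall, unmarshall } from '@aws-sdk/util-dynamodb'
import createHttpError from 'http-errors'
// client (a shared DynamoDBClient), httpResponses and DBGetSchemaType are project-local

export const dbGet = async <T>({
  key,
  table
}: DBGetSchemaType): Promise<T | undefined> => {
  try {
    const command = new GetItemCommand({
      TableName: table,
      // Convert the plain JS key into DynamoDB attribute values, e.g. { pk: { S: '...' } }
      Key: marshall(key)
    })
    const response = await client.send(command)
    if (response.Item) {
      return unmarshall(response.Item) as T
    }
    // No item with this key
    return undefined
  } catch (err) {
    // Hide SDK errors from the client behind a generic 500
    throw createHttpError(500, httpResponses[500])
  }
}
```

This helps with code reusability and makes your HTTP handlers cleaner to read.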

I'm using Superstruct, an awesome library for runtime data validation whose schemas also give us inferred TypeScript types. All database schemas, utility function arguments and success/error response shapes are written with it. For example, this is the shape of a blog item:

```ts
import { object, string, type Infer } from 'superstruct'

export const BlogDBSchema = object({
  pk: string(),
  sk: string(),
  title: string(),
  content: string(),
  uid: string(),
  createdAt: string(),
  updatedAt: string()
})

// Static TypeScript type derived from the runtime schema
export type BlogDBSchemaType = Infer<typeof BlogDBSchema>
```

Now, let's take a look at the Lambda function handlers themselves. Each route in the API is tied to a Lambda function as follows:

```ts
import { Api } from 'sst/constructs' // assuming SST v2
// `stack` comes from the enclosing stack function; authorizerFunction is defined elsewhere in the stack

const api = new Api(stack, 'api', {
  cors: {
    // Allow the deployed frontend and local development to call the API with cookies
    allowOrigins: ['https://blogcloud.vercel.app', 'http://localhost:3000'],
    allowCredentials: true,
    allowHeaders: ['content-type'],
    allowMethods: ['GET', 'POST'],
    exposeHeaders: ['token'],
    maxAge: '30 minutes'
  },
  authorizers: {
    customAuthorizer: {
      type: 'lambda',
      function: authorizerFunction,
      // "simple" responses: the authorizer returns { isAuthorized, context }
      responseTypes: ['simple'],
      // The Cookie header is passed to the authorizer and used as the cache key
      identitySource: ['$request.header.Cookie'],
      resultsCacheTtl: '30 minutes'
    }
  },
  routes: {
    // Public routes
    'POST /login': 'src/functions/users/login.handler',
    'POST /signup': 'src/functions/users/signup.handler',
    // Routes protected by the custom authorizer
    'POST /blog': {
      authorizer: 'customAuthorizer',
      function: 'src/functions/blogs/putBlog.handler'
    },
    'POST /profile': {
      authorizer: 'customAuthorizer',
      function: 'src/functions/users/profile.handler'
    },
    'GET /blogs': {
      authorizer: 'customAuthorizer',
      function: 'src/functions/blogs/getAllBlogs.handler'
    },
    'GET /my/blogs': {
      authorizer: 'customAuthorizer',
      function: 'src/functions/blogs/getMyBlogs.handler'
    },
    'GET /blog': {
      authorizer: 'customAuthorizer',
      function: 'src/functions/blogs/getBlog.handler'
    }
  }
})
```

The custom authorizer is a Lambda function whose sole purpose is to authenticate requests. API Gateway caches its results for 30 minutes. As you can see, each route is mapped to a handler file. Let's look at the getBlog.handler file, which fetches a particular blog:

```ts
import middy from '@middy/core'
import httpErrorHandler from '@middy/http-error-handler'
import createHttpError from 'http-errors'
import type { APIGatewayProxyEventV2WithLambdaAuthorizer, APIGatewayProxyResultV2, Handler } from 'aws-lambda'
// Schemas and schema types, dbGet, appSecrets, httpResponses, checkValidError and
// validateQueryParamsMiddleware are project-local

// Narrow the event so queryStringParameters has the validated shape
type Event = Omit<APIGatewayProxyEventV2WithLambdaAuthorizer<AuthContextSchemaType>, 'queryStringParameters'> & {
  queryStringParameters: GetBlogArgumentsSchemaType
}

const getBlogHandler: Handler<Event, APIGatewayProxyResultV2> = async (event) => {
  try {
    // The authorizer puts the logged-in user's email into the request context
    const pk = `USER#${event.requestContext.authorizer.lambda.email}`
    const { id } = event.queryStringParameters
    const sk = `BLOG#${id}`

    const item = await dbGet<BlogDBSchemaType>({
      table: appSecrets.mainTable,
      key: { pk, sk }
    })

    return {
      statusCode: 200,
      body: JSON.stringify({
        status: 'success',
        message: 'ok',
        data: item ?? null
      })
    }
  } catch (err) {
    // Re-throw known HTTP errors so httpErrorHandler can format them
    if (checkValidError(err)) {
      throw err
    }
    throw createHttpError(500, httpResponses[500], { expose: true })
  }
}

export const handler = middy(getBlogHandler)
  .use(validateQueryParamsMiddleware({ schema: GetBlogArgumentsSchema }))
  .use(httpErrorHandler())
```

I can build pk because the email address is present in the JWT payload. The blog ID is passed from the frontend as a query parameter. The handler uses the dbGet package shown earlier to retrieve the item. I'm using the middy package to attach middlewares to my Lambda functions. Error handling works by throwing known errors at runtime and letting an error-handler middleware resolve the error message and return a JSON object. In case of unknown crashes, the error message returned is a generic 'Something went wrong'.
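The error-handling flow described above can be sketched as a single function that maps whatever was thrown to the standard response shape. This is a hypothetical illustration of the idea, not the implementation of @middy/http-error-handler or of the repo's checkValidError. It assumes known errors carry a numeric statusCode and an expose flag, as errors created with http-errors do:

```typescript
// Hypothetical sketch: turn anything thrown by a handler into the standard error body.
interface KnownError extends Error {
  statusCode: number
  expose?: boolean
}

interface ErrorResult {
  statusCode: number
  headers: Record<string, string>
  body: string
}

const GENERIC_MESSAGE = 'Something went wrong'

// Known errors are Error instances carrying a numeric statusCode
const isKnownError = (err: unknown): err is KnownError =>
  err instanceof Error && typeof (err as Partial<KnownError>).statusCode === 'number'

const toErrorResponse = (err: unknown): ErrorResult => {
  let statusCode = 500
  let message = GENERIC_MESSAGE
  if (isKnownError(err)) {
    statusCode = err.statusCode
    // Only show messages explicitly marked as safe for clients
    if (err.expose === true) message = err.message
  }
  return {
    statusCode,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ status: 'error', message })
  }
}
```

Keeping the decision of which messages are safe to expose in one place means a crash deep inside a handler never leaks internal details to the client.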

Continuous Integration and Deployment:

The Next.js project is deployed on Vercel, which deploys automatically on every git push. For the AWS backend, I have written a GitHub Actions workflow that checks for lint, type and dependency issues and deploys to production on every push to main that touches the backend.

```yaml
name: Deploy to AWS

on:
  push:
    paths:
      # Only run when files under backend/ change
      - 'backend/**'
    branches:
      - 'main'

jobs:
  deploy:
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: backend
    env:
      STAGE: production
      AWS_LOCAL_REGION: ${{ secrets.AWS_REGION }}
    steps:
      - name: Checkout Code
        uses: actions/checkout@v3

      - name: Install Dependencies
        run: npm ci

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v2
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_LOCAL_REGION }}

      - name: Check for lint
        run: npm run lint

      - name: Check for compilation errors
        run: npm run typecheck

      - name: Check for dependency issues
        run: npm run depcheck

      - name: Get Envs from SSM
        # The generated .env exports the parameters to later steps via $GITHUB_ENV
        run: |
          node ci/getEnvs.js
          source .env
          rm -f .env

      - name: Deploy to AWS
        run: npm run deploy
```

I have a separate Node script that fetches all the environment variables my infrastructure needs for deployment from AWS Systems Manager Parameter Store and loads them:

```js
import { SSMClient, GetParametersCommand } from '@aws-sdk/client-ssm'
import { writeFileSync } from 'fs'
import { isCI } from 'ci-info'

// Turns each SSM parameter into a shell line, e.g. /production/auth-secret -> AUTH_SECRET
const createEnvExpressions = (parameters) => {
  let env = ''
  for (const param of parameters) {
    const name = param.Name.split('/')[2].replaceAll('-', '_').toUpperCase()
    if (isCI) {
      // GitHub Actions: mask the value in logs, then export it to later steps via $GITHUB_ENV
      env += `echo "::add-mask::${param.Value}"\n`
      env += `echo "${name}=${param.Value}" >> $GITHUB_ENV\n`
    } else {
      env += `export ${name}="${param.Value}"\n`
    }
  }
  return env
}

const getEnvs = async () => {
  const stage = process.env.STAGE
  const region = process.env.AWS_LOCAL_REGION

  if (!stage) {
    throw new Error('$STAGE not defined')
  }

  const client = new SSMClient({ region })

  const ssmParameters = [
    `/${stage}/aws-account-id`,
    `/${stage}/auth-issuer`,
    `/${stage}/auth-audience`,
    `/${stage}/auth-secret`
  ]

  const command = new GetParametersCommand({
    Names: ssmParameters,
    // Needed to get plaintext values for SecureString parameters; ignored for plain Strings
    WithDecryption: true
  })

  const response = await client.send(command)

  // GetParameters does not throw for missing names; it lists them in InvalidParameters
  if (response.InvalidParameters?.length) {
    throw new Error(`Missing SSM parameters: ${response.InvalidParameters.join(', ')}`)
  }

  const env = createEnvExpressions(response.Parameters ?? [])
  writeFileSync('.env', env)
}

getEnvs().catch((err) => {
  console.log('Could not get envs from SSM', err)
  process.exit(1)
})
```

Learnings:

I did face quite a few challenges with some parts of the app. For example, Next.js 13 introduced the new App Router, which includes client and server components. There were times when I wanted to render some parts of the page on the server and some on the client. Next.js does not support an islands architecture the way Astro does. At the time I was developing this, the new App Router was not yet production ready and was a bit buggy as well.

Overall, I have learnt a lot from the challenges I faced. I developed the app with good coding practices in mind and feel that I've been able to create a robust, scalable and maintainable system.
