June 09, 2025

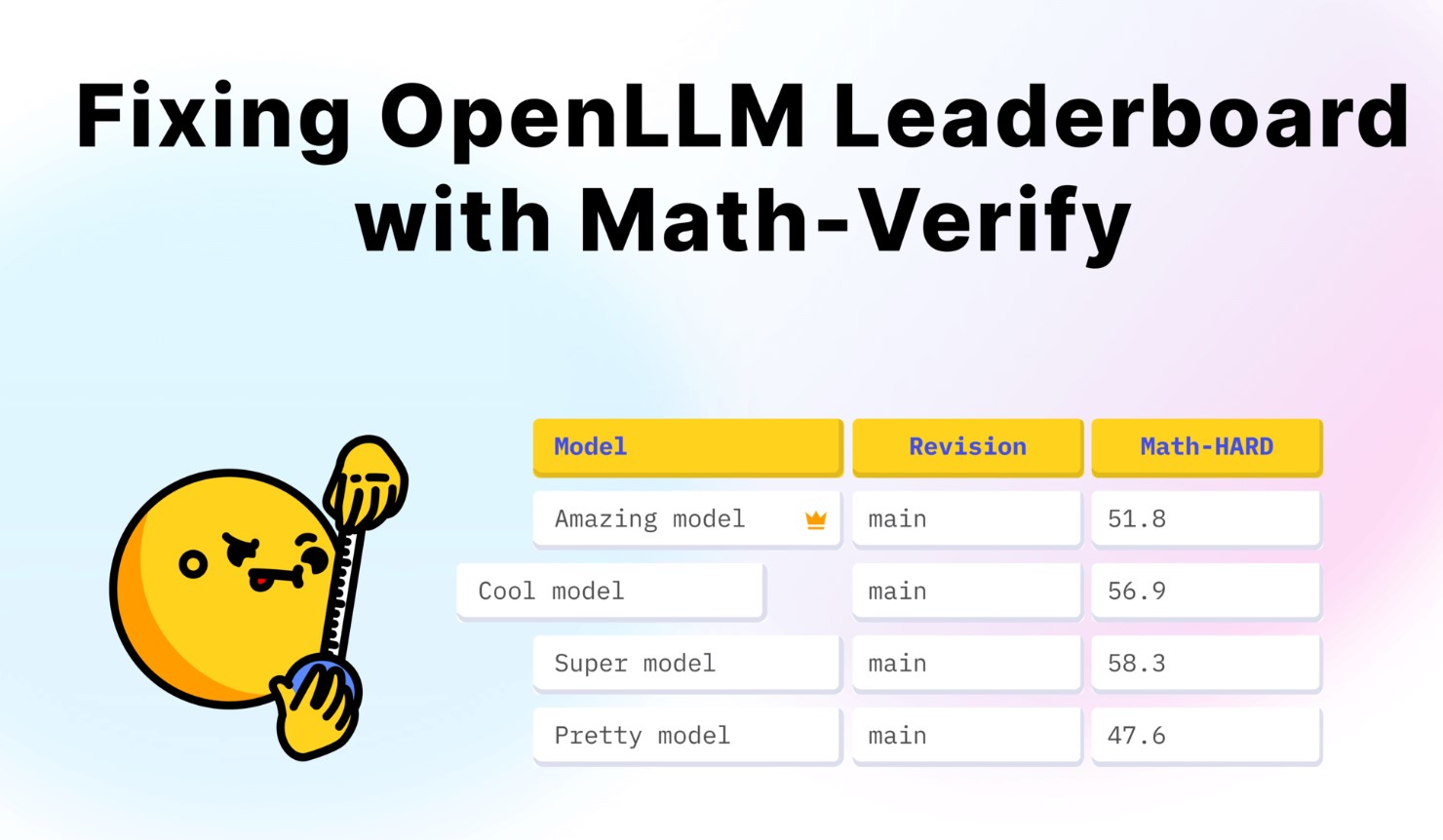

Fixing Open LLM Leaderboard with Math-Verify


Have you ever wondered whether the AI models at the top of the rankings really are the best at math? What if I told you that many of these scores were wrong until recently, because the leaderboard penalized models that did not phrase their answers in one particular way?

The Open LLM Leaderboard, a leading ranking of open large language models, had exactly this problem: its math evaluation was inaccurate. Formatting mismatches, poor parsing, and faulty symbolic comparisons caused correct answers to be marked wrong.

This is where Math-Verify comes in. It fixes these evaluation flaws so that models are ranked on their mathematical performance rather than on technicalities. In this post, I will explain what Math-Verify is, why it was needed, and how to try its core idea with a few lines of code. Let's dive in.

Why Math Evaluation on the Open LLM Leaderboard Was Broken

The Open LLM Leaderboard is one of the most widely used references for comparing open models. Its math benchmark is MATH-Hard, a set of 1,324 challenging competition problems spanning topics such as algebra, number theory, and precalculus.

On paper, this makes it a useful way to compare AI models. In practice, the evaluation failed them.

What was the main issue? The leaderboard expected answers in one specific format. Even small variations in wording, formatting, or spacing caused correct answers to be marked wrong. Suppose a model solves a problem correctly but ends with "The ultimate answer is [ANSWER]." instead of the expected phrasing.

That counted as an instant failure.
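To make this failure mode concrete, here is a minimal sketch of a strict, template-based extractor. The exact template (`Final Answer: The final answer is ... I hope it is correct.`) and the function name `strict_extract` are illustrative assumptions, not the leaderboard's actual evaluation code:

```python
import re

# Hypothetical strict template: an answer is found only if the model
# reproduces this exact sentence, including the closing phrase.
STRICT_PATTERN = re.compile(
    r"Final Answer: The final answer is (.+?)\. I hope it is correct\."
)

def strict_extract(response):
    """Return the answer captured by the strict template, or None if it is absent."""
    match = STRICT_PATTERN.search(response)
    return match.group(1).strip() if match else None

responses = [
    "Final Answer: The final answer is 42. I hope it is correct.",
    "The ultimate answer is 42.",
    "So the answer is $\\boxed{42}$.",
]

for r in responses:
    print(repr(strict_extract(r)))
# '42'
# None
# None
```

Only the response that reproduces the template word for word yields an answer; the other two are scored as wrong no matter what they contain.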

It got worse. Even when models followed the required format, the leaderboard's parser frequently misread mathematical expressions. Misparsed fractions, radicals, and even elementary algebraic expressions produced false negatives: correct solutions marked wrong.

Here's an example:

- Correct Answer: $14 + 7\sqrt{2}$

- Model Output: $7\sqrt{2} + 14$

- Leaderboard's Verdict: Incorrect

Although both expressions are mathematically equivalent, the system failed to recognize them as equal. This unfairly lowered many models' scores.
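String-level patches do not solve this. The sketch below uses a hypothetical heuristic, `term_sorted_match`, that strips spaces and sorts `+`-separated terms. It rescues the reordered answer above but still rejects an equivalent answer written in a different notation:

```python
def term_sorted_match(a, b):
    """Hypothetical string heuristic: strip spaces, split on '+', sort the terms."""
    def canon(s):
        return sorted(s.replace(" ", "").split("+"))
    return canon(a) == canon(b)

# Plain string equality rejects a simple reordering.
print("14 + 7\\sqrt{2}" == "7\\sqrt{2} + 14")  # False

# Sorting the terms rescues this particular case...
print(term_sorted_match("14 + 7\\sqrt{2}", "7\\sqrt{2} + 14"))  # True

# ...but not an equivalent value written in another notation.
print(term_sorted_match("\\frac{1}{2}", "0.5"))  # False
```

Every new notation would need another rule, which is why a symbolic comparison is the more robust fix.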

Something had to change.

What is Math-Verify?

This is exactly the gap Math-Verify fills. It evaluates answers mathematically rather than syntactically, using symbolic computation instead of string matching.
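The difference between comparing expression structure and comparing mathematical value is easy to see in SymPy, the symbolic math library Math-Verify builds on. This minimal sketch uses plain SymPy, not Math-Verify's own API, to show that `==` checks the expression tree while simplifying the difference checks equivalence:

```python
from sympy import expand, simplify, symbols

x = symbols("x")
a = (x + 1)**2
b = x**2 + 2*x + 1

# == compares expression trees, and these two trees differ.
print(a == b)                # False
# Simplifying the difference checks mathematical equivalence instead.
print(simplify(a - b) == 0)  # True
# Expanding to a canonical polynomial form also works in this case.
print(expand(a) == b)        # True
```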

Math-Verify is built on SymPy, a Python library for symbolic mathematics. With it, the leaderboard can:

- Correctly parse mathematical expressions

- Automatically simplify equivalent expressions

- Handle various answer formats and notations

- Recognize valid alternative forms of an answer
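As a rough illustration of accepting alternative forms, the sketch below compares multi-part answers as unordered collections of SymPy expressions and ignores a leading `x =`. The parsing rules (comma-separated items written in SymPy syntax rather than LaTeX) and the helper names are assumptions for this example, not how Math-Verify works internally; splitting on commas would, for instance, break interval or tuple answers.

```python
from sympy import simplify, sympify

def parse_answer_list(text):
    """Split a comma-separated answer into SymPy expressions (hypothetical rules)."""
    items = []
    for part in text.split(","):
        part = part.strip()
        if "=" in part:
            # Keep only the right-hand side, so "x = 2" and "2" compare equal.
            part = part.split("=", 1)[1].strip()
        items.append(sympify(part))
    return items

def answers_match(pred, gold):
    """True if the answers contain equivalent values, ignoring order."""
    p, g = parse_answer_list(pred), parse_answer_list(gold)
    if len(p) != len(g):
        return False
    remaining = list(p)
    for expected in g:
        for i, candidate in enumerate(remaining):
            if simplify(candidate - expected) == 0:
                del remaining[i]  # each predicted value may match only once
                break
        else:
            return False  # no predicted value matched this gold value
    return True

print(answers_match("x = 3, x = 2", "2, 3"))  # True
print(answers_match("sqrt(8)", "2*sqrt(2)"))  # True
print(answers_match("2", "2, 3"))             # False
```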

Math-Verify evaluates AI models based on math skills, not response structure.

The best part? Implementation is absurdly easy.

Fixing the Evaluation: Implementing Math-Verify

Math-Verify is not only for AI researchers. You can try its core idea, symbolic answer checking, in a few lines of Python. Here is a basic SymPy function:

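```python
from sympy import simplify, sympify

def verify_math_answer(model_output, correct_answer):
    """Return True if the two answer strings are mathematically equivalent."""
    try:
        # Parse both strings into SymPy expressions.
        # Note: sympify relies on eval, so only use it on trusted input.
        predicted = sympify(model_output)
        expected = sympify(correct_answer)
        # Equivalent expressions have a difference that simplifies to zero.
        return simplify(predicted - expected) == 0
    except Exception as e:
        # Report parsing problems instead of crashing, and count them as mismatches.
        print(f"Error parsing: {e}")
        return False

# Example usage
model_output = "7*sqrt(2) + 14"
correct_answer = "14 + 7*sqrt(2)"

print(verify_math_answer(model_output, correct_answer))  # True
```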

Here is what the function does:

- It uses SymPy's `simplify` function to compare the expressions mathematically rather than as text.

- If two expressions are mathematically equivalent, they count as equal, even if they are written differently.

- If an expression cannot be parsed, the function catches the exception and reports the error instead of crashing.
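The function above expects SymPy syntax such as `7*sqrt(2)`, but models usually answer in LaTeX. Math-Verify handles that conversion itself; the converter below is only a hypothetical toy sketch of the idea. It covers just `\frac{}{}`, `\sqrt{}`, and multiplication by a leading number, so treat it as an illustration rather than a robust parser.

```python
import re
from sympy import simplify, sympify

# Match \sqrt{...} and \frac{...}{...} whose arguments contain no braces,
# so nested expressions are rewritten from the innermost level outward.
SQRT = re.compile(r"\\sqrt\{([^{}]*)\}")
FRAC = re.compile(r"\\frac\{([^{}]*)\}\{([^{}]*)\}")

def latex_to_sympy_str(latex):
    """Toy LaTeX-to-SymPy conversion (hypothetical helper, limited syntax)."""
    s = latex.replace("$", "").replace(" ", "")
    previous = None
    while previous != s:  # repeat until no more rewrites apply
        previous = s
        s = SQRT.sub(r"sqrt(\1)", s)
        s = FRAC.sub(r"((\1)/(\2))", s)
    # Make multiplication by a number explicit: 7sqrt(2) -> 7*sqrt(2).
    return re.sub(r"(\d)(?=[a-zA-Z(])", r"\1*", s)

def latex_equivalent(latex_a, latex_b):
    """True if both LaTeX answers parse and their difference simplifies to zero."""
    a = sympify(latex_to_sympy_str(latex_a))
    b = sympify(latex_to_sympy_str(latex_b))
    return simplify(a - b) == 0

print(latex_equivalent("14 + 7\\sqrt{2}", "7\\sqrt{2} + 14"))            # True
print(latex_equivalent("\\frac{\\sqrt{2}}{2}", "\\frac{1}{\\sqrt{2}}"))  # True
print(latex_equivalent("\\frac{1}{2}", "\\frac{1}{3}"))                  # False
```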

This kind of mathematical comparison is exactly what the Open LLM Leaderboard needed, and integrating Math-Verify had a huge effect.

The Impact of Math-Verify on Leaderboard Rankings

Every model on the leaderboard was re-evaluated with Math-Verify. The outcome? A complete reshuffle of the rankings.

On average, models solved 61 more problems, raising their scores by 4.66 points. Some models shot up the rankings, revealing their true capabilities.

For instance, DeepSeek models, whose answers had often been misread, roughly tripled their scores. Qwen models, meanwhile, often wrote their final answers in `\boxed{}` notation, which the previous evaluator missed.
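Boxed answers are a good example of what a template-based extractor misses. The following is a minimal sketch of pulling the contents of the last `\boxed{...}` out of a response while respecting nested braces; `extract_last_boxed` is a hypothetical helper, not Math-Verify's own extraction code:

```python
def extract_last_boxed(text):
    """Return the contents of the last \\boxed{...} in text, or None if absent or unbalanced."""
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    content_start = start + len(marker)
    depth = 1  # we are already inside the opening brace of \boxed{
    for i in range(content_start, len(text)):
        if text[i] == "{":
            depth += 1
        elif text[i] == "}":
            depth -= 1
            if depth == 0:
                return text[content_start:i]
    return None  # the braces never closed

response = "Simplifying gives $\\boxed{\\frac{1}{2}}$, so we are done."
print(extract_last_boxed(response))  # \frac{1}{2}
```

Taking the last box is a design choice for this sketch: models sometimes box intermediate results, so the final box is treated as the answer.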

The new leaderboard king? NVIDIA's AceMath models now top the MATH-Hard rankings under the fairer evaluation.

Math-Verify transformed the game, not just the scoreboard.

Final Words and Future of LLM Math Evaluation

Math-Verify is an essential tool for developing and evaluating AI models. Whether you are testing a new LLM or double-checking an old one, it helps keep math assessments fair, accurate, and trustworthy.

The Open LLM Leaderboard is better than ever, but there is still room for improvement. Future versions of Math-Verify may evaluate complex mathematical reasoning more thoroughly or support more advanced notation.

Check out the updated Open LLM Leaderboard rankings to see how your favorite AI models perform under Math-Verify. If your AI project involves mathematics, start using Math-Verify now.

Stop rating models by how they style their responses and start ranking them by math ability.
