May 12, 2025

How to Use Python for Real-Time Data Streaming with Kafka

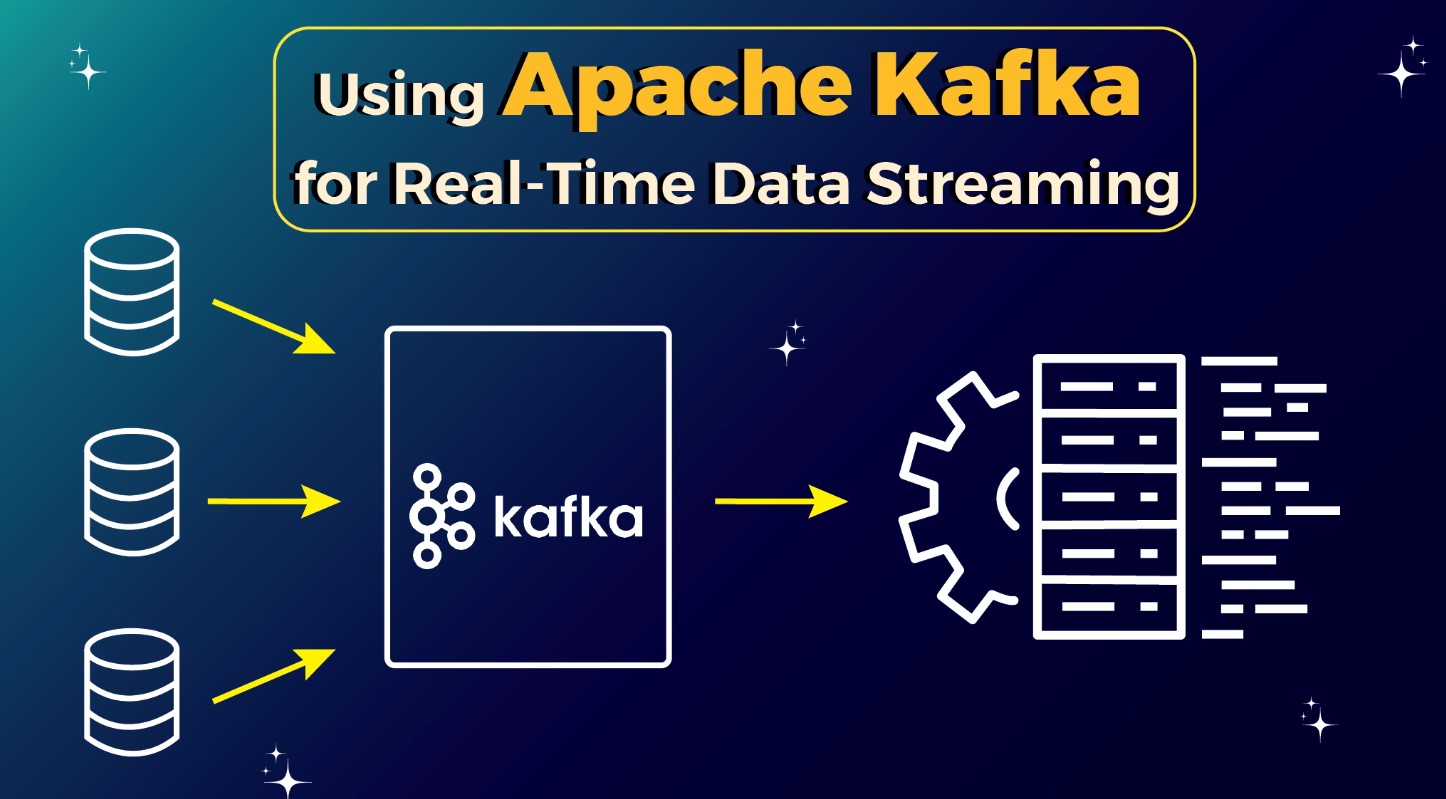

Processing and responding to data in real time is crucial in today's fast-paced digital environment. Monitoring user activity on a website, processing IoT sensor data, and analyzing stock market trends all depend on real-time data streaming. If you want to learn how to build this kind of system, you have come to the right place: Python and Apache Kafka together make a strong foundation for real-time data pipelines.

What is Apache Kafka?

Before getting into the how, let's look at what Kafka is. Apache Kafka is an open-source distributed event-streaming platform that handles large volumes of data with fault tolerance and scalability.

Kafka is built around four core concepts: producers (applications that send data), consumers (applications that read data), topics (named categories that messages are written to), and brokers (the servers that store the messages and serve them to consumers). In short, Kafka is a fast messaging system for managing real-time data streams.
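To make these roles concrete, here is a toy in-memory sketch. It is not how Kafka is implemented: real Kafka adds partitions, replication, and persistence, none of which is modeled here. The `ToyBroker` class and its methods are hypothetical names used only for illustration.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a broker: each topic is an append-only list."""

    def __init__(self):
        self._topics = defaultdict(list)

    def append(self, topic, value):
        """Producer side: append a record and return its offset."""
        log = self._topics[topic]
        log.append(value)
        return len(log) - 1

    def read(self, topic, offset):
        """Consumer side: return all records at or after the given offset."""
        return self._topics[topic][offset:]


broker = ToyBroker()
broker.append("test-topic", b"first")
broker.append("test-topic", b"second")

# A consumer tracks its own position (offset) in the topic's log.
position = 0
records = broker.read("test-topic", position)
position += len(records)
```

The point to take away is that a topic behaves like a log: producers only append, and each consumer remembers how far it has read.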

Setting Up Kafka and Python

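Start by installing a Kafka server. You can run Kafka locally or use a managed service such as Confluent Cloud. To interact with Kafka from Python, use a client library such as kafka-python (a pure-Python client) or confluent-kafka (a wrapper around the C library librdkafka). The examples below use kafka-python.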

Here's a quick step-by-step:

- 1. Install Kafka: Follow the official Kafka documentation to install and start a broker.

- 2. Install Python Libraries: Install the Kafka client library with pip:

    pip install kafka-python

- 3. Set Up a Basic Producer:

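    from kafka import KafkaProducer

    # Connect to the local broker
    producer = KafkaProducer(bootstrap_servers='localhost:9092')

    # send() is asynchronous; flush() blocks until the message is delivered
    producer.send('test-topic', b'Hello, Kafka!')
    producer.flush()
    producer.close()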

- 4. Set Up a Basic Consumer:

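    from kafka import KafkaConsumer

    # auto_offset_reset='earliest' also reads messages sent before the consumer started
    consumer = KafkaConsumer('test-topic', bootstrap_servers='localhost:9092',
                             auto_offset_reset='earliest')

    # Iterating blocks and yields messages as they arrive
    for message in consumer:
        print(f"Received: {message.value.decode()}")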

You may now send and receive data!
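Because `send()` in kafka-python returns a future rather than waiting for the broker, it can be useful to confirm delivery while you are experimenting. The following is a minimal sketch; `send_and_confirm` is a hypothetical helper name, and the 10-second timeout is an arbitrary choice.

```python
def send_and_confirm(producer, topic, payload, timeout=10):
    """Send one message and block until the broker acknowledges it.

    `producer` is expected to behave like kafka-python's KafkaProducer:
    send() returns a future whose get() yields the record metadata.
    """
    future = producer.send(topic, payload)   # returns immediately
    metadata = future.get(timeout=timeout)   # raises if delivery failed or timed out
    return metadata.topic, metadata.partition, metadata.offset
```

With a running broker, you would pass in the producer from step 3, for example `send_and_confirm(producer, 'test-topic', b'Hello, Kafka!')`, and get back the topic, partition, and offset where the message landed. Blocking on every message slows a producer down considerably, so this is better suited to debugging than to high-volume sending.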

Creating a Real-Time Data Pipeline with Python and Kafka

Let's build a real-time pipeline step by step. As an example, consider streaming temperature readings from an IoT sensor.

Step 1: Define the Data Source

To keep things simple, we'll simulate the sensor data with a generator. Here's an example:

    import random
    import time

    def generate_sensor_data():
        """Yield one simulated temperature reading per second, forever."""
        while True:
            yield {'sensor_id': 1, 'temperature': random.uniform(20.0, 30.0)}
            time.sleep(1)
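A generator that never ends is awkward to try out on its own. One way to preview a few readings is `itertools.islice`, as in this sketch. The `generate_readings` variant, its `interval` parameter, and the `timestamp` field are assumptions added for illustration and are not part of the pipeline above.

```python
import itertools
import random
import time


def generate_readings(sensor_id=1, interval=1.0):
    """Yield simulated temperature readings forever, pausing between them."""
    while True:
        yield {
            "sensor_id": sensor_id,
            "temperature": round(random.uniform(20.0, 30.0), 2),
            "timestamp": time.time(),  # assumed extra field, seconds since epoch
        }
        time.sleep(interval)


# Take only the first three readings; interval=0 skips the pause for a quick check.
sample = list(itertools.islice(generate_readings(interval=0), 3))
```

Making the interval a parameter also lets you speed up or slow down the simulated sensor without editing the function.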

Step 2: Write the Kafka Producer

Send this data to Kafka:

    from kafka import KafkaProducer
    import json

    # Serialize each dict to UTF-8 encoded JSON before sending
    producer = KafkaProducer(
        bootstrap_servers='localhost:9092',
        value_serializer=lambda v: json.dumps(v).encode('utf-8')
    )

    # generate_sensor_data() is the generator defined in Step 1
    for data in generate_sensor_data():
        producer.send('sensor-data', data)
        print(f"Sent: {data}")
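Once there is more than one sensor, ordering starts to matter. Kafka preserves order only within a partition, and by default messages with the same key go to the same partition, so keying each message by sensor ID keeps one sensor's readings in order. The sketch below shows one way to do that; the helper names `encode_key`, `encode_value`, and `send_reading` are hypothetical, while `key_serializer` and the `key=` argument to `send()` are kafka-python features.

```python
import json


def encode_key(sensor_id):
    """Turn a sensor id into bytes suitable for use as a Kafka message key."""
    return str(sensor_id).encode("utf-8")


def encode_value(reading):
    """Serialize a reading dict to UTF-8 JSON bytes."""
    return json.dumps(reading).encode("utf-8")


def send_reading(producer, topic, reading):
    """Send a reading keyed by its sensor id.

    Assumes `producer` was created with key_serializer=encode_key and
    value_serializer=encode_value, for example:
        KafkaProducer(bootstrap_servers='localhost:9092',
                      key_serializer=encode_key,
                      value_serializer=encode_value)
    """
    return producer.send(topic, key=reading["sensor_id"], value=reading)
```

With a single sensor the key makes no visible difference, but it costs nothing to add now and avoids surprises when the topic later gets more partitions.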

Step 3: Write the Kafka Consumer

Process the data in real time:

    from kafka import KafkaConsumer
    import json

    # Decode each message from UTF-8 JSON back into a dict
    consumer = KafkaConsumer(
        'sensor-data',
        bootstrap_servers='localhost:9092',
        value_deserializer=lambda v: json.loads(v.decode('utf-8'))
    )

    for message in consumer:
        data = message.value
        print(f"Processing: {data}")

This is a basic real-time pipeline!
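Printing each message is only a placeholder for real processing. As one possible next step, here is a sketch that keeps a rolling average of recent temperatures and flags when it crosses a threshold. The `RollingAverage` class, the `process` function, the window size of 3, and the 28.0 threshold are all illustrative assumptions.

```python
from collections import deque


class RollingAverage:
    """Mean of the most recent `size` values."""

    def __init__(self, size):
        self._window = deque(maxlen=size)

    def add(self, value):
        """Record a value and return the current window average."""
        self._window.append(value)
        return sum(self._window) / len(self._window)


def process(readings, window_size=3, threshold=28.0):
    """Yield (reading, rolling_avg, alert) for each incoming reading."""
    avg = RollingAverage(window_size)
    for reading in readings:
        current = avg.add(reading["temperature"])
        yield reading, current, current > threshold
```

Because `process` accepts any iterable of dicts, it can be fed from the consumer with `process(message.value for message in consumer)` or from a plain list while you are testing.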

Use Cases for Real-Time Data Streaming

- Monitoring Systems: Detect issues and unusual activity as soon as they occur.

- Real-Time Dashboards: Feed live metrics and analytics to dashboards as events arrive.

- IoT Applications: Ingest and process sensor readings from connected devices.

Best Practices for Using Python with Kafka

- Batching and Compression: Batch and compress messages to improve throughput when sending large volumes of data.

- Manage Offsets: Commit consumer offsets only after messages have been processed to avoid losing data.

- Go Asynchronous: Consider asynchronous code to handle high traffic more effectively.

- Secure the Pipeline: Use encryption and authentication to protect the data stream.
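The practices above map onto kafka-python settings roughly as sketched below. Treat the values as starting points rather than recommendations: the numbers, the group name, the credentials, and the file path are placeholders, and the helper function names are hypothetical. The dictionaries are meant to be unpacked into the client constructors, for example `KafkaProducer(**PRODUCER_SETTINGS)`.

```python
# Batching and compression (KafkaProducer keyword arguments).
PRODUCER_SETTINGS = {
    "bootstrap_servers": "localhost:9092",
    "linger_ms": 50,             # wait up to 50 ms to fill a batch
    "batch_size": 32 * 1024,     # maximum batch size in bytes, per partition
    "compression_type": "gzip",  # compress each batch before sending
}

# Manual offset management (KafkaConsumer keyword arguments).
CONSUMER_SETTINGS = {
    "bootstrap_servers": "localhost:9092",
    "group_id": "sensor-processors",  # hypothetical consumer group name
    "enable_auto_commit": False,      # commit only after processing succeeds
    "auto_offset_reset": "earliest",
}

# Encryption and authentication; merge into either dict above.
SECURITY_SETTINGS = {
    "security_protocol": "SASL_SSL",
    "sasl_mechanism": "PLAIN",
    "sasl_plain_username": "<username>",  # placeholder
    "sasl_plain_password": "<password>",  # placeholder
    "ssl_cafile": "/path/to/ca.pem",      # placeholder
}


def consume_with_manual_commit(consumer, handle):
    """Process each message, then commit its offset.

    If `handle` raises, the offset is not committed, so the message is
    read again after a restart (at-least-once delivery).
    """
    for message in consumer:
        handle(message.value)
        consumer.commit()


def send_async(producer, topic, value, on_ok, on_error):
    """Send without blocking; the callbacks run when the broker responds."""
    future = producer.send(topic, value)
    future.add_callback(on_ok)
    future.add_errback(on_error)
    return future
```

Committing after every message is the simplest way to show the idea but is slow in practice; committing once per batch of messages is a common compromise.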

Conclusion

Python and Kafka let you harness the power of real-time data streaming, which has changed how we manage information. From installing a Kafka server to building producers and consumers, the process is simple yet flexible.

Ready to go further? For more advanced stream processing, look into frameworks such as Kafka Streams or Apache Spark. A few lines of Python are enough to create real-time magic!
