October 30, 2024

Understanding and Implementing SVMs for Classification

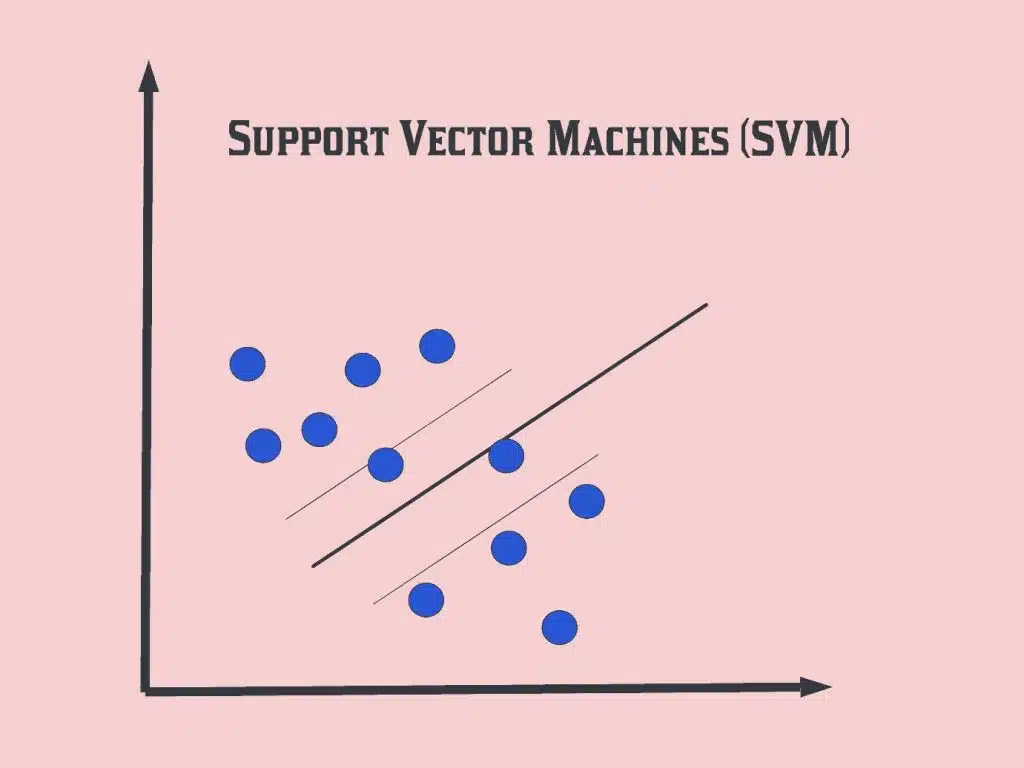

Have you ever wondered how machines classify an input with high accuracy? One answer is the Support Vector Machine (SVM). SVMs are a popular and robust family of machine learning models for classification tasks. They are good at drawing clear boundaries between classes, and they cope well with high-dimensional data. In this article, I cover the basics of SVMs, how they work, and the steps to implement them in Python.

How SVM Works: The Basics

At its core, an SVM separates data using a hyperplane. Imagine a multi-dimensional space in which each data point belongs to one of several classes. The SVM finds the optimal hyperplane that separates these classes while maximizing the margin, that is, the distance between the hyperplane and the nearest points of each class. The data points nearest to the hyperplane are called support vectors. They matter because they alone define the decision boundary.
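To make the hyperplane, margin, and support vectors concrete, here is a minimal sketch with Scikit-learn. It assumes two Iris classes (setosa and versicolor) and the two petal features, which I picked because those classes are cleanly separable there. The large `C` value is also my assumption, chosen to approximate a hard margin.

```python
import numpy as np
from sklearn import datasets
from sklearn.svm import SVC

# Keep two classes (setosa = 0, versicolor = 1) and the two petal features
iris = datasets.load_iris()
mask = iris.target < 2
X = iris.data[mask][:, 2:4]
y = iris.target[mask]

# A large C leaves almost no room for margin violations (illustrative choice)
clf = SVC(kernel="linear", C=1000.0)
clf.fit(X, y)

# For a linear kernel the hyperplane is w . x + b = 0
w = clf.coef_[0]
b = clf.intercept_[0]

# The margin is the distance between the two boundary lines w . x + b = +1 and -1
margin_width = 2.0 / np.linalg.norm(w)

print("Support vectors per class:", clf.n_support_)
print("Support vectors:\n", clf.support_vectors_)
print("Hyperplane normal w:", w, "offset b:", b)
print("Margin width:", margin_width)
```

Only the points listed in `support_vectors_` determine the boundary. Removing any other training point and refitting should leave the hyperplane unchanged.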

Mathematical Foundation of SVM

An SVM's main goal is to maximize the margin between two classes. This is a constrained optimization problem, and techniques such as Lagrange multipliers are used to solve it. The kernel trick lets an SVM work with non-linear data as well as linear data: a kernel function implicitly maps the points into a higher-dimensional space where a separating hyperplane may exist. The hyperplane itself is learned from the training data rather than specified by hand.
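For readers who want the formulas, the margin-maximization problem is usually written as follows. The notation is my own assumption, since the article does not define symbols: $x_i$ are training points, $y_i \in \{-1, +1\}$ their labels, $w$ and $b$ the hyperplane parameters, $\alpha_i$ the Lagrange multipliers, and $K$ the kernel function. This is the standard hard-margin form, shown as a sketch rather than a full derivation.

```latex
% Primal problem: the margin is 2 / \lVert w \rVert, so maximizing it
% is equivalent to minimizing \lVert w \rVert^2
\begin{aligned}
\min_{w,\,b} \quad & \tfrac{1}{2}\,\lVert w \rVert^{2} \\
\text{subject to} \quad & y_i\,(w^{\top} x_i + b) \ge 1, \qquad i = 1, \dots, n
\end{aligned}

% Dual problem obtained with Lagrange multipliers \alpha_i;
% the data appear only through the kernel K(x_i, x_j)
\begin{aligned}
\max_{\alpha} \quad & \sum_{i=1}^{n} \alpha_i
  - \tfrac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n}
    \alpha_i\,\alpha_j\,y_i\,y_j\,K(x_i, x_j) \\
\text{subject to} \quad & \alpha_i \ge 0, \qquad \sum_{i=1}^{n} \alpha_i\,y_i = 0
\end{aligned}

% Resulting classifier; only support vectors have \alpha_i > 0
f(x) = \operatorname{sign}\!\Big( \sum_{i=1}^{n} \alpha_i\,y_i\,K(x_i, x) + b \Big)
```

With the linear kernel $K(x_i, x_j) = x_i^{\top} x_j$, the dual recovers the ordinary separating hyperplane. Swapping in a different $K$ is what the kernel trick amounts to.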

Types of Kernels in SVM

Picking the right kernel is very important for an SVM to work well. Here are some of the most commonly used types:

- Linear Kernel: Best for data that can be separated linearly.

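- Polynomial Kernel: Useful when the decision boundary is curved and can be described by polynomial combinations of the features.

- Radial Basis Function (RBF): Great for non-linear data; it is also the default kernel in Scikit-learn's SVC.

- Sigmoid Kernel: Based on the tanh function, so it behaves like an activation function in a neural network.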

Understanding the structure of your data can help you choose the right kernel for a classification job.
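When it is not obvious which kernel suits the data, one practical option is to try each of them and compare cross-validated accuracy. The sketch below does that on the full Iris dataset. The 5-fold setting, the default hyperparameters, and the scaling step are my assumptions, not recommendations from the article.

```python
from sklearn import datasets
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

iris = datasets.load_iris()
X, y = iris.data, iris.target

scores = {}
for kernel in ("linear", "poly", "rbf", "sigmoid"):
    # Scale inside the pipeline so each fold is standardized on its own training part
    model = make_pipeline(StandardScaler(), SVC(kernel=kernel))
    # Mean accuracy over 5 cross-validation folds
    scores[kernel] = cross_val_score(model, X, y, cv=5).mean()

for kernel, score in scores.items():
    print(f"{kernel:>8}: {score:.3f}")
```

The ranking depends on the dataset and on each kernel's hyperparameters, so treat the numbers as a starting point rather than a verdict.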

Implementing SVM for Classification

Now, let's use the Scikit-learn library to set up an SVM in Python, using the Iris dataset as a simple example.

# Importing necessary libraries

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.metrics import classification_report, confusion_matrix

# Loading the Iris dataset

iris = datasets.load_iris()

X = iris.data[:, :2] # Using only the first two features for visualization

y = iris.target

# Splitting the dataset into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Creating and training the SVM model

model = SVC(kernel='linear')

model.fit(X_train, y_train)

# Making predictions

y_pred = model.predict(X_test)

# Evaluating the model

print(confusion_matrix(y_test, y_pred))

print(classification_report(y_test, y_pred))

# Visualizing the results

plt.scatter(X_train[:, 0], X_train[:, 1], c=y_train, marker='o', label='Training Data')

plt.scatter(X_test[:, 0], X_test[:, 1], c=y_test, marker='x', label='Test Data')

plt.title('SVM Classification')

plt.xlabel('Sepal length (cm)')

plt.ylabel('Sepal width (cm)')

plt.legend()

plt.show()

This code shows how to use an SVM to classify the Iris dataset. It loads the dataset (keeping only the first two features so the data can be plotted in 2D), splits it into training and test sets, trains a linear SVM model, and uses a confusion matrix and a classification report to evaluate how well the model performs on the test set.
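The scatter plot above shows the data points but not what the model learned. As an optional extension, the sketch below shades the region assigned to each class by predicting over a grid of points. The grid resolution, the 0.5 border, and the output filename `svm_decision_regions.png` are my own illustrative choices.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove this line to use plt.show()
import matplotlib.pyplot as plt
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Same data and split as in the example above
iris = datasets.load_iris()
X = iris.data[:, :2]
y = iris.target
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

model = SVC(kernel="linear").fit(X_train, y_train)

# Build a grid that covers the feature range with a small border
x_min, x_max = X[:, 0].min() - 0.5, X[:, 0].max() + 0.5
y_min, y_max = X[:, 1].min() - 0.5, X[:, 1].max() + 0.5
xx, yy = np.meshgrid(
    np.linspace(x_min, x_max, 300),
    np.linspace(y_min, y_max, 300),
)

# Predict a class for every grid point, then reshape back to the grid
Z = model.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

fig, ax = plt.subplots()
ax.contourf(xx, yy, Z, alpha=0.3)                      # shaded decision regions
ax.scatter(X_test[:, 0], X_test[:, 1], c=y_test, edgecolor="k")
ax.set_xlabel(iris.feature_names[0])
ax.set_ylabel(iris.feature_names[1])
ax.set_title("Linear SVM decision regions (test points overlaid)")
fig.savefig("svm_decision_regions.png")
```

Test points that fall in a region of a different color are the ones the model misclassifies, which ties the picture back to the confusion matrix.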

Benefits and Limitations of SVM

SVMs offer several benefits. They work well in high-dimensional spaces, produce clear margins, and perform well on small datasets. However, they can struggle when classes overlap heavily, and training can be computationally expensive on large datasets. Feature scaling is also important: SVMs are sensitive to the scale of the inputs, so unscaled features can hurt both accuracy and training time.
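One way to handle scaling is to put it in a pipeline together with the classifier, so the scaler is fitted on training data only. The sketch below also searches over the `C` and `gamma` parameters with `GridSearchCV`. The grid values, the RBF kernel, and the stratified split are assumptions I chose for illustration.

```python
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42, stratify=iris.target
)

# The scaler is refitted inside every cross-validation fold,
# so no information from held-out data leaks into training
pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("svc", SVC(kernel="rbf")),
])

# Candidate values are illustrative; widen or narrow them for your own data
param_grid = {
    "svc__C": [0.1, 1, 10, 100],
    "svc__gamma": ["scale", 0.01, 0.1, 1],
}

search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

print("Best parameters:", search.best_params_)
print("Test accuracy:", search.score(X_test, y_test))
```

On large datasets the grid search multiplies the training cost, so a smaller grid or a linear kernel may be the more practical choice.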

Conclusion

Overall, Support Vector Machines are a strong classification method that works well in both linear and non-linear situations. Once you understand how they work and know how to build them in Python, you can use SVMs for a variety of machine learning tasks. Don't forget to tune the parameters, such as the kernel and the regularization parameter C, for the best results.
